AI Bias: How Algorithms Inherit Human Prejudices and What It Means for Us

Explore how AI algorithms can inherit human biases and the impact of these prejudices on society. This article breaks down AI bias, real-world examples, and what can be done to create fairer AI systems.

Author

D Team

30 Aug 2024

Artificial Intelligence (AI) is often seen as an impartial decision-maker, free from the emotional and irrational biases that humans have. The reality is more complicated. AI can inherit the biases of the data it is trained on, leading to discriminatory outcomes in areas ranging from job applications to criminal sentencing. In this article, we’ll explore how AI bias occurs, look at real-world examples of its impact, and discuss what we can do to ensure AI works fairly for everyone.

What is AI Bias?

AI bias occurs when algorithms produce results that are systematically skewed for or against particular groups of people because of flawed assumptions or flawed data in the machine learning process. Most often, AI models are trained on data that reflects human prejudices, whether based on race, gender, age, or other factors.

Analogy: The AI Mirror Effect

Think of AI as a mirror that reflects the data it’s given. If the data is biased, the AI will reflect those biases in its decisions. For instance, if an AI is trained on historical hiring data that favors male candidates, it is likely to keep favoring men in future job screenings, perpetuating gender bias.
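A minimal Python sketch can make the mirror effect concrete. It assumes a deliberately simple, hypothetical “model” that just counts how often past candidates with the same gender and qualification status were hired, and it uses invented historical records in which equally qualified women were hired less often. The names `train_frequency_model` and `predict` are illustrative, not taken from any real hiring system.

```python
from collections import defaultdict

def train_frequency_model(records):
    """Learn the historical hire rate for each (gender, qualified) profile by counting."""
    counts = defaultdict(lambda: [0, 0])  # profile -> [number hired, number seen]
    for gender, qualified, hired in records:
        counts[(gender, qualified)][0] += int(hired)
        counts[(gender, qualified)][1] += 1
    return {profile: hired / seen for profile, (hired, seen) in counts.items()}

def predict(model, gender, qualified, threshold=0.5):
    """Recommend a candidate if the learned hire rate for their profile exceeds the threshold."""
    return model.get((gender, qualified), 0.0) > threshold

# Hypothetical history: qualified men were hired 8 times out of 10,
# equally qualified women only 3 times out of 10.
history = (
    [("M", True, True)] * 8 + [("M", True, False)] * 2
    + [("F", True, True)] * 3 + [("F", True, False)] * 7
)

model = train_frequency_model(history)
print(predict(model, "M", qualified=True))  # True
print(predict(model, "F", qualified=True))  # False
```

Nothing in the code encodes a preference for men; the skew comes entirely from the records it learns from. Real models are far more complex, but they can pick up the same kind of pattern.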

How AI Inherits Human Biases: The Learning Process

AI learns by identifying patterns in the data it’s fed. If that data contains human biases, such as racial or gender disparities, the AI will learn those disparities as if they were legitimate patterns to follow.

1. Data Collection and Labeling Biases

Example: Facial recognition software has been criticized for misidentifying people of color more frequently than white individuals. This is often because the datasets used to train these algorithms contain a disproportionate number of white faces, making the AI less accurate for the groups that are underrepresented.

Expert Quote: “When your training data is biased, your AI outcomes will be too. It’s like teaching a child only half of the alphabet and expecting them to read fluently,” says Dr. Maria Johnson, an AI ethics researcher.
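One way to surface this kind of disparity is to measure accuracy separately for each group instead of relying on a single overall figure. The sketch below is a hypothetical illustration in plain Python: the labels, predictions, and group names `"A"` and `"B"` are invented, and `accuracy_by_group` is not a function from any particular library.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return the fraction of correct predictions separately for each group."""
    correct, total = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical match/no-match labels for 8 face comparisons, 4 per group.
y_true = [1, 0, 1, 1, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A"] * 4 + ["B"] * 4

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall)                                    # 0.75
print(accuracy_by_group(y_true, y_pred, groups))  # {'A': 1.0, 'B': 0.5}
```

An overall accuracy of 75% looks acceptable here, yet the model is right every time for group A and only half the time for group B.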

2. Historical and Societal Biases Embedded in Data

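Example: AI systems used in criminal justice, like predictive policing, can end up targeting certain communities more heavily because the historical data reflects existing prejudices in law enforcement practices. If past arrest records mostly show where police chose to patrol, a model trained on them will direct more patrols to the same neighborhoods, which in turn generates still more records from those areas.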

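Case Study: In 2016, a ProPublica analysis of COMPAS, a risk-assessment algorithm used by courts in the U.S., found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be wrongly labeled high-risk. A commonly cited explanation is that the model learned from historical data shaped by unequal policing and sentencing.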
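A finding that one group is wrongly labeled high-risk at twice the rate of another usually describes a gap in false positive rates: among people who did not reoffend, the share who were nonetheless flagged as high-risk. The following sketch computes that rate per group on invented data; the group names `"X"` and `"Y"` and all numbers are hypothetical and do not reproduce any real study.

```python
def false_positive_rate_by_group(y_true, y_pred, groups):
    """Among people who did NOT reoffend (y_true == 0), the share labeled high-risk (y_pred == 1)."""
    flagged, negatives = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] = negatives.get(group, 0) + 1
            flagged[group] = flagged.get(group, 0) + int(pred == 1)
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical outcomes: 1 = reoffended / labeled high-risk, 0 = did not / labeled low-risk.
y_true = [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1]
groups = ["X"] * 6 + ["Y"] * 6

fpr = false_positive_rate_by_group(y_true, y_pred, groups)
print(fpr)                  # {'X': 0.4, 'Y': 0.2}
print(fpr["X"] / fpr["Y"])  # 2.0
```

Here people in group X who did not reoffend are flagged twice as often as those in group Y, which is the kind of gap the case study describes.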

3. Algorithm Design Bias

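Sometimes, the way an algorithm is designed can unintentionally prioritize certain outcomes over others. This can happen when developers inadvertently embed their own assumptions into choices such as which features the model uses, what target it is trained to predict, and how it trades off different kinds of errors.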

Example: AI used in credit scoring may rely on socioeconomic factors, such as zip code, that correlate with race or gender. Even if the model never sees race or gender directly, these proxy variables can lead to discriminatory lending practices.
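Removing race or gender from a model’s inputs does not guarantee fair outcomes if another feature acts as a proxy for them. This hypothetical Python sketch applies a rule that looks only at an invented zip code field, never at group membership, to a made-up applicant pool in which zip code and group are strongly correlated. The rule, the zip codes, and the function names are all illustrative assumptions.

```python
def approval_rate_by_group(applicants, approve):
    """Apply a decision rule to each applicant's features and report approval rates per group.
    The rule only ever sees the features, never the group label."""
    approved, total = {}, {}
    for group, features in applicants:
        total[group] = total.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(approve(features))
    return {g: approved[g] / total[g] for g in total}

def zip_code_rule(features):
    # Hypothetical "neutral" rule: decline applicants from zip code area "B".
    return features["zip"] != "B"

# Hypothetical applicants: group membership is strongly correlated with zip code.
applicants = (
    [("group1", {"zip": "A"})] * 9 + [("group1", {"zip": "B"})] * 1
    + [("group2", {"zip": "A"})] * 2 + [("group2", {"zip": "B"})] * 8
)

rates = approval_rate_by_group(applicants, zip_code_rule)
print(rates)  # {'group1': 0.9, 'group2': 0.2}
```

Even though `zip_code_rule` never sees the group label, group1 is approved 90% of the time and group2 only 20% of the time.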

Real-World Examples of AI Bias

1. Biased Hiring Algorithms

Example: In 2018, a major tech company discontinued its AI hiring tool after discovering that it discriminated against female applicants. The AI had been trained on resumes submitted over a ten-year period, most of which came from men, so the system learned to favor male candidates.

2. Healthcare Disparities

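Example: An AI tool used to prioritize patients for extra care was found to favor white patients over Black patients. The AI used past healthcare spending as a stand-in for healthcare needs, but spending does not reflect need equally across racial groups: less had historically been spent on Black patients with the same level of need, so the tool underestimated how sick they were.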
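The underlying problem here is the choice of target: a model trained to predict spending will rank patients by spending, even when spending is an imperfect stand-in for need. This minimal sketch uses four invented patients, in which group B patients incurred less spending than group A patients with similar need, to show how ranking by the proxy can change who gets selected. All values, field names, and the `select_top` helper are hypothetical.

```python
def select_top(patients, key, k):
    """Return the ids of the k patients with the highest value of `key`."""
    ranked = sorted(patients, key=lambda p: p[key], reverse=True)
    return {p["id"] for p in ranked[:k]}

# Hypothetical patients. Group "B" patients incurred less spending than
# group "A" patients with similar need (for example, because of unequal access to care).
patients = [
    {"id": 1, "group": "A", "need": 9, "spending": 9000},
    {"id": 2, "group": "A", "need": 5, "spending": 7000},
    {"id": 3, "group": "B", "need": 9, "spending": 6000},
    {"id": 4, "group": "B", "need": 8, "spending": 5500},
]

print(select_top(patients, "spending", k=2))  # {1, 2}
print(select_top(patients, "need", k=2))      # {1, 3}
```

Ranking by spending selects patient 2 (need 5) over patient 3 (need 9); ranking by the quantity we actually care about selects patient 3.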

3. Social Media Content Moderation

Example: Social media platforms often use AI to flag inappropriate content. However, these algorithms can be biased against certain languages or cultural expressions, resulting in uneven enforcement of rules.

The Impact of AI Bias on Society

AI bias doesn’t just affect individual decisions—it can have wide-ranging societal impacts. Biased algorithms can reinforce existing inequalities, perpetuate stereotypes, and undermine public trust in AI technologies.

1. Economic Impacts

Issue: Biased AI in hiring or credit scoring can limit economic opportunities for certain groups, widening the wealth gap.

2. Legal and Ethical Concerns

Issue: AI used in law enforcement or legal settings can lead to unfair treatment, wrongful accusations, or biased sentencing, challenging the integrity of justice systems.

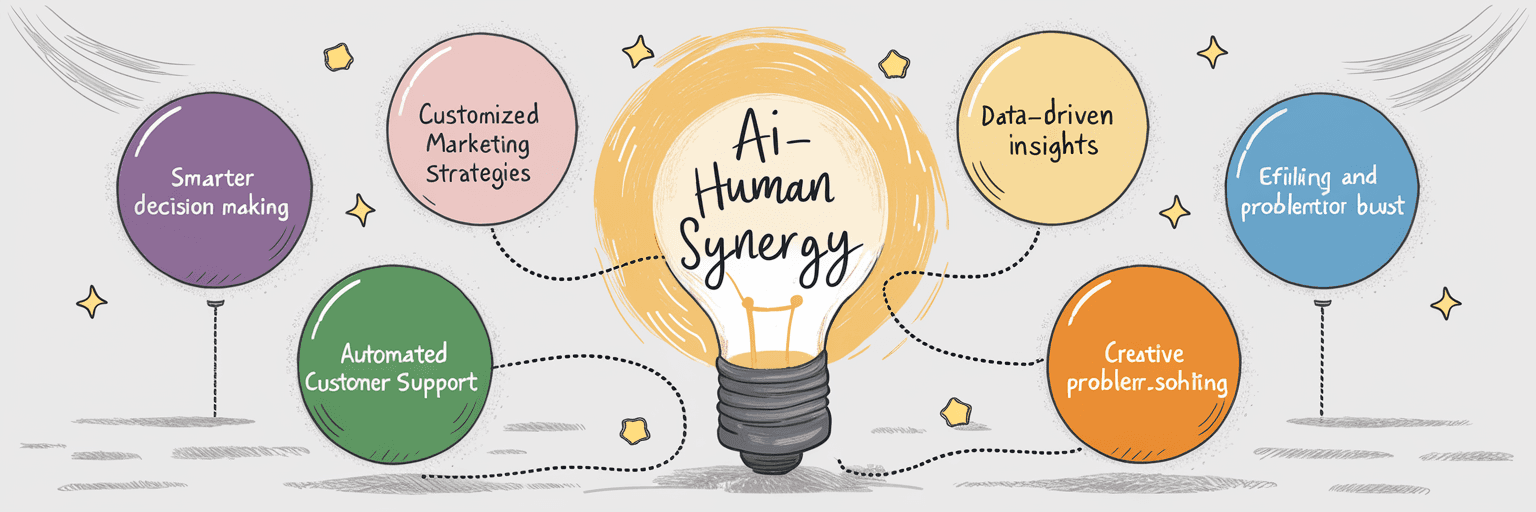

Fighting AI Bias: What Can Be Done?

1. Diverse Data Collection

Collecting diverse data sets that represent all groups fairly is crucial. This means actively seeking data that reflects different genders, races, ages, and socioeconomic backgrounds.

2. Transparency and Accountability in AI Development

Developers should be transparent about how AI models are built, what data is used, and how decisions are made. This includes regularly auditing algorithms for bias, for example by comparing selection rates and error rates across demographic groups.
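A basic audit can compare how often each group receives a favorable decision. The sketch below computes per-group selection rates and the ratio of the lowest to the highest rate; the 0.8 default follows the “four-fifths” rule of thumb used in U.S. employment guidelines, though the right metric and threshold depend on the context. The decisions and group labels are invented, and the function names are illustrative.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = selected/approved) in each group."""
    selected, total = {}, {}
    for decision, group in zip(decisions, groups):
        total[group] = total.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + decision
    return {g: selected[g] / total[g] for g in total}

def disparate_impact_audit(decisions, groups, threshold=0.8):
    """Compare the lowest and highest group selection rates.
    Returns (ratio, passes); the 0.8 default mirrors the four-fifths rule of thumb."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return ratio, ratio >= threshold

# Hypothetical audit log: group A selected 6 of 10 times, group B 3 of 10 times.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["A"] * 10 + ["B"] * 10

print(selection_rates(decisions, groups))         # {'A': 0.6, 'B': 0.3}
print(disparate_impact_audit(decisions, groups))  # (0.5, False)
```

A ratio of 0.5 falls well below 0.8, so this hypothetical system would be flagged for closer review. Selection rates are only one lens; an audit would typically also compare error rates across groups.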

3. Human Oversight

Implementing human oversight in decision-making processes where AI is used, especially for high-stakes or borderline cases, can help catch biased outcomes before they cause harm.
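One common way to add human oversight is to let the system act automatically only when its score is far from the decision boundary and to route borderline cases to a person. This is a minimal sketch under assumed thresholds of 0.3 and 0.7 on a hypothetical model score between 0 and 1; the function name `route_decision` and the decision labels are illustrative.

```python
def route_decision(score, low=0.3, high=0.7):
    """Act automatically only when the score is far from the decision boundary;
    send borderline cases to a human reviewer. The thresholds are hypothetical."""
    if score >= high:
        return "auto_approve"
    if score <= low:
        return "auto_reject"
    return "human_review"

# Hypothetical model scores for four applications.
for score in [0.92, 0.55, 0.41, 0.08]:
    print(score, route_decision(score))
# 0.92 auto_approve
# 0.55 human_review
# 0.41 human_review
# 0.08 auto_reject
```

A stricter variant could also send every automatic rejection to a reviewer, since those are the decisions most likely to harm an applicant.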

4. Bias Mitigation Techniques

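Techniques such as reweighting training data, adversarial debiasing, and fairness-aware algorithms can help reduce bias in AI. Reweighting gives underrepresented combinations of group and outcome more influence during training. Adversarial debiasing trains the model alongside a second model (the adversary) that tries to predict a protected attribute such as race from the main model’s outputs, and penalizes the main model when the adversary succeeds. Fairness-aware algorithms build a fairness constraint directly into the training objective.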
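Reweighting can be done in several ways. One common scheme, sometimes called reweighing, gives each training example the weight P(group) × P(label) / P(group, label), so that after weighting, group membership and outcome look statistically independent. The sketch below estimates those probabilities from counts on a small invented dataset; the function names are illustrative, and adversarial debiasing and fairness-aware algorithms are not shown.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label), estimated from counts.
    Combinations rarer than independence would predict get weights above 1."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    pos = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    tot = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / tot

# Hypothetical training set: group A has 3 of 4 positive labels, group B only 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(round(weighted_positive_rate(groups, labels, weights, "A"), 6))  # 0.5
print(round(weighted_positive_rate(groups, labels, weights, "B"), 6))  # 0.5
```

Before weighting, group A has a 75% positive rate and group B 25%; after weighting, both groups have a weighted positive rate of 50%, matching the overall rate. The weights would then be passed to a learning algorithm that supports per-example weights.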

From a Research-Driven Perspective

AI bias represents a critical challenge in the development and deployment of intelligent systems. Understanding the sources of bias and actively working to mitigate them is essential for building AI that is fair, transparent, and trustworthy. From better data practices to rigorous auditing, the path forward involves a commitment to ethical AI design that serves all of society equitably.

Final Thoughts

AI’s power to influence decisions in our lives is growing, but so is its potential to reflect and amplify human prejudices. The challenge is not just technological—it’s deeply ethical and societal. By acknowledging and addressing AI bias, we can harness the full potential of AI in ways that are fair and just, ensuring that these powerful tools serve to uplift rather than divide.

Understanding AI bias is the first step in creating algorithms that truly benefit everyone. The journey to unbiased AI is ongoing, but with continued efforts, we can strive towards more equitable and inclusive AI systems.