How Does GPT-4 Work? A Simple Explanation of Large Language Models

Discover how GPT-4 works with a simple, step-by-step explanation of large language models.

Author

D Team

28 Aug 2024

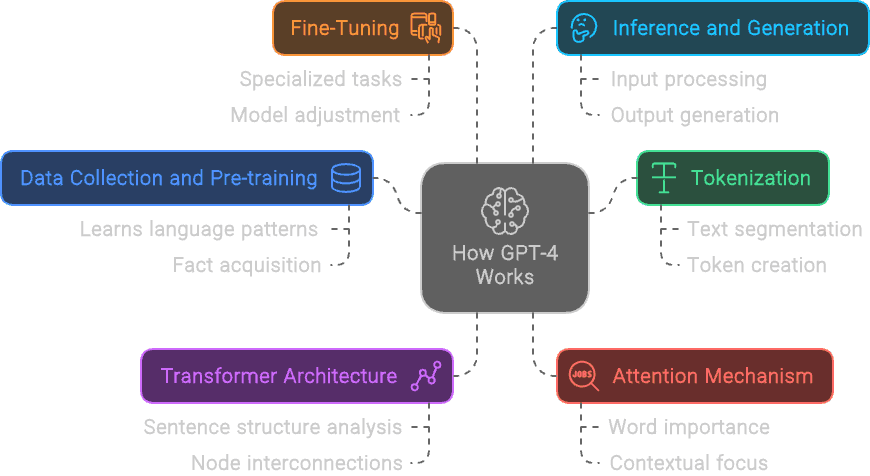

Large language models (LLMs) like GPT-4 are transforming how we interact with technology, providing impressive capabilities in understanding and generating human-like text. However, the inner workings of these models can seem complex and mysterious. This article aims to break down how GPT-4 functions, offering a straightforward explanation that anyone can understand. Additionally, a flowchart will be included to help visualize the core processes that make GPT-4 work.

What is GPT-4?

GPT-4 (Generative Pre-trained Transformer 4) is a large language model in OpenAI’s GPT series, first released in March 2023. It’s designed to understand and generate text that closely resembles human writing. GPT-4 is capable of answering questions, creating content, translating languages, and even engaging in conversations with an impressive level of coherence and context-awareness.

Key Features of GPT-4:

Contextual Understanding: GPT-4 can understand the context of a conversation or text input, allowing it to provide relevant and meaningful responses.

Text Generation: It generates text by repeatedly predicting the next token (a word or word fragment) in a sequence, based on the input it receives.

Versatility: GPT-4 can handle a wide range of tasks, including creative writing, technical explanations, summarization, and more.

How GPT-4 Works: Step-by-Step Explanation

Understanding GPT-4 involves breaking down its operation into a series of interconnected steps. Here’s a simplified overview:

Data Collection and Pre-training:

GPT-4 is trained on vast amounts of text data sourced from books, articles, websites, and other written content.

The model learns language patterns, grammar, facts, and reasoning by analyzing billions of sentences.
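To make "learning language patterns" concrete, here is a toy sketch that counts which word follows which in a tiny corpus and turns those counts into next-word probabilities. This is a simple bigram model, not GPT-4's actual training procedure (which adjusts the weights of a neural network); the corpus and the function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen with a follower
    return {w: c / total for w, c in followers.items()}

# Made-up three-sentence "training set".
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

Even at this scale the idea carries over: "cat" follows "the" more often than any other word in the corpus, so it gets the highest probability. A large language model learns far richer patterns, but from the same kind of signal.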

Tokenization:

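Before processing, the input text is broken down into smaller units called tokens. These tokens can be whole words, pieces of words (subwords), or even individual characters, depending on the tokenizer's vocabulary: common words usually map to a single token, while rare words are split into several pieces.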

Tokenization helps the model handle text more efficiently, allowing it to understand and generate language accurately.
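The sketch below shows the general idea of subword tokenization with a greedy longest-match rule. It is a simplification: GPT models use a learned byte-pair-encoding vocabulary, whereas the tiny `VOCAB` set and the `tokenize` function here are hypothetical and exist only to show how a word can be split into known pieces.

```python
def tokenize(text, vocab):
    """Greedy longest-match split of `text` into pieces found in `vocab`.

    Falls back to a single character when no longer piece matches.
    """
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until one is in vocab.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens

# Hypothetical vocabulary; real ones hold tens of thousands of entries or more.
VOCAB = {"token", "ization", " is", " fun", "un", "believ", "able"}

pieces = tokenize("tokenization is fun", VOCAB)
print(pieces)
print(tokenize("unbelievable", VOCAB))
```

Note that joining the pieces back together always reproduces the original text, so nothing is lost in the split.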

Transformer Architecture:

The core of GPT-4 is its transformer architecture, which uses attention mechanisms to determine the relationships between words in a sentence.

This architecture allows GPT-4 to focus on relevant parts of the text, understanding context and generating coherent responses.

Attention Mechanism (Self-Attention):

GPT-4 uses self-attention to weigh the importance of each word relative to others in a sentence, helping it understand context and meaning.

For example, in the sentence "The cat sat on the mat," self-attention helps the model understand that "the mat" is the object associated with "sat."
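For readers who want to see the arithmetic, here is a minimal sketch of scaled dot-product self-attention in plain Python. It makes two loud assumptions: the 2-dimensional embeddings are made up, and the same vectors are used as queries, keys, and values, whereas a real transformer first applies learned projections to produce each of them. The causal restriction (each token attends only to itself and earlier tokens) is how GPT-style models are generally described.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(vectors):
    """Scaled dot-product attention; each position sees itself and earlier ones."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: no peeking at later tokens
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in visible
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible vectors.
        mixed = [
            sum(w * v[dim] for w, v in zip(weights, visible))
            for dim in range(d)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Made-up embeddings for three tokens.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token "pays attention" to the tokens available to it. The first token can only attend to itself, while the third spreads its attention across all three.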

Fine-Tuning:

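After pre-training, GPT-4 undergoes fine-tuning on specific datasets that align with its intended use, such as conversational data for chat applications. OpenAI reports that this stage also used reinforcement learning from human feedback (RLHF), in which human ratings of model outputs steer the model toward preferred responses.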

Fine-tuning helps refine the model’s responses, making them more accurate and contextually appropriate.
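As a rough illustration of what "refining" means numerically, the sketch below nudges a model's scores so that a desired token becomes more likely, using gradient descent on a cross-entropy loss. The big assumption is that it updates the scores (logits) directly; real fine-tuning updates the network's weights, and the numbers and function names here are invented.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Loss is small when the target token gets high probability."""
    return -math.log(softmax(logits)[target_index])

def gradient_step(logits, target_index, lr=0.5):
    """One gradient-descent step; the gradient is (probability - one_hot)."""
    probs = softmax(logits)
    return [
        z - lr * (p - (1.0 if i == target_index else 0.0))
        for i, (z, p) in enumerate(zip(logits, probs))
    ]

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
target = 1                # index of the token the training example wants

before = cross_entropy(logits, target)
for _ in range(20):
    logits = gradient_step(logits, target)
after = cross_entropy(logits, target)

print(round(before, 3), round(after, 3))
```

The loss drops as the target token's probability rises, which is the basic mechanism by which training examples reshape a model's behavior.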

Inference and Generation:

During operation, GPT-4 generates text by predicting the next token in a sequence, using its understanding of language patterns learned during training.

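Each newly generated token is appended to the input, and the model predicts again from the full sequence, including everything the user has written so far. This is what makes conversations dynamic and context-aware; the model's learned weights do not change while it is being used.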
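The generation loop can be sketched in a few lines. Everything model-related here is a stand-in: `TOY_LOGITS` is a hand-written score table and `toy_model` looks only at the last token, whereas a real model computes scores from the entire sequence with a neural network. The loop itself (predict, pick a token, append, repeat) is the part that matters.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Stand-in for the neural network: hand-written scores for the next token.
TOY_LOGITS = {
    "the": {"cat": 2.0, "mat": 1.0},
    "cat": {"sat": 3.0, "<end>": 0.5},
    "sat": {"on": 3.0, "<end>": 0.5},
    "on": {"the": 3.0},
    "mat": {"<end>": 3.0},
}

def toy_model(tokens):
    """Hypothetical model: scores candidates from the last token only."""
    return TOY_LOGITS.get(tokens[-1], {"<end>": 0.0})

def generate(prompt, max_new_tokens=10, temperature=0.0, seed=0):
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        candidates = toy_model(tokens)
        words = list(candidates)
        if temperature == 0.0:
            # Greedy decoding: always take the highest-scoring token.
            choice = max(words, key=candidates.get)
        else:
            probs = softmax([candidates[w] for w in words], temperature)
            choice = rng.choices(words, weights=probs, k=1)[0]
        if choice == "<end>":
            break
        tokens.append(choice)  # the new token becomes part of the context
    return tokens

print(generate(["the"], max_new_tokens=3))
print(generate(["the"], temperature=1.0, seed=1))
```

With `temperature=0.0` the output is always the same; raising the temperature lets lower-scoring tokens through occasionally, which is one reason the same prompt can produce different responses.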

Understanding GPT-4’s Strengths and Limitations

GPT-4, as one of the most advanced large language models, has brought significant advancements in AI-driven text generation and understanding. However, like all technologies, it comes with its own set of strengths and limitations that affect how it can be used effectively. Here’s a deeper look at what makes GPT-4 powerful and where its challenges lie.

Strengths:

Speed and Efficiency:

GPT-4 processes and generates text in real time, making it highly efficient for tasks that require quick responses. It can handle large amounts of data and generate coherent text within seconds, making it invaluable for real-time customer support, content creation, and other fast-paced applications.

Versatility:

One of GPT-4’s standout features is its ability to perform a wide range of tasks without needing specific reprogramming. Whether it’s drafting an email, writing poetry, answering technical questions, or creating conversational dialogue, GPT-4 adapts to different contexts seamlessly.

Language Comprehension:

GPT-4’s transformer architecture allows it to understand context better than previous models, providing relevant responses that closely align with user input. This makes it highly effective in tasks requiring context retention, such as long-form writing or complex conversations.

Scalability:

GPT-4 can be deployed across various platforms and industries, from small-scale personal assistants to large-scale enterprise solutions. Its ability to scale means it can handle everything from personalized interactions to massive data-driven applications.

Creativity and Innovation:

Beyond simple data processing, GPT-4 shows a capacity for creativity. It can generate imaginative stories, craft unique marketing copy, and offer novel ideas, making it a valuable tool for creative professionals looking to brainstorm or develop content.

Learning from Massive Data:

GPT-4 is trained on a vast and diverse range of internet data, which allows it to generate text that reflects a broad spectrum of human knowledge, styles, and perspectives. This extensive training enables the model to produce detailed, informative, and relevant responses across numerous fields.

Error Reduction Through Iteration:

Within a conversation, GPT-4 can revise a response when the user points out a problem or asks for changes, so errors can be reduced across successive drafts. This iterative approach is particularly useful in applications where initial outputs are used as drafts for further human editing.

Accessibility:

GPT-4 makes advanced AI capabilities accessible to those without technical expertise. It allows users to interact with sophisticated language models through intuitive interfaces, democratizing access to AI-powered tools and enhancing productivity.

Support for Multilingual Content:

GPT-4’s language capabilities extend beyond English, supporting multiple languages with varying degrees of fluency. This multilingual support makes it a powerful tool for global communication, content translation, and cross-cultural dialogue.

Enhanced Contextual Awareness:

GPT-4 excels at maintaining context over long interactions, allowing it to handle complex questions and sustain meaningful conversations without losing track of earlier points. This contextual continuity is crucial for applications like interactive storytelling and long-form Q&A sessions.

Limitations:

Lack of True Understanding:

Despite its impressive outputs, GPT-4 does not genuinely understand the text it generates. It operates on statistical correlations learned during training rather than possessing true comprehension, meaning it can sometimes provide responses that sound logical but lack factual accuracy or deeper meaning.

Potential for Bias:

GPT-4 is trained on vast, uncurated internet data that includes biased, misleading, or harmful content. As a result, the model can unintentionally generate outputs that reflect these biases, posing risks in sensitive contexts, such as healthcare, finance, or law.

Dependence on Training Data Quality:

The quality of GPT-4’s responses heavily depends on the data it was trained on. Since the model on its own cannot access real-time data and its knowledge stops at its training cutoff, it might provide outdated or incorrect information, particularly in rapidly evolving fields like current events or technology.

Limited Common Sense Reasoning:

While GPT-4 can generate text that appears intelligent, it often lacks common sense reasoning. This can result in nonsensical or overly literal responses when faced with tasks that require understanding abstract concepts, idioms, or nuanced human behavior.

Inability to Verify Information:

GPT-4 generates responses based on its training data and cannot verify facts in real-time. It doesn’t have access to live sources or databases, meaning it cannot provide updated or fact-checked information, especially when handling questions about recent events.

Tendency to Hallucinate:

GPT-4 can sometimes produce information that is entirely fabricated, known as “hallucination.” This phenomenon occurs when the model generates plausible-sounding text that has no basis in reality, which can mislead users who assume the information is accurate.

Sensitivity to Input Phrasing:

The model’s outputs can vary significantly based on how questions or prompts are phrased. Slight changes in wording can lead to vastly different answers, which complicates its use in applications requiring consistent and reliable information.

Limited Emotional Intelligence:

GPT-4 can simulate empathy or understanding in its responses, but it does not possess true emotional intelligence. It may struggle to provide appropriate support in emotionally charged interactions, such as those in mental health or customer service contexts.

Resource-Intensive:

Running and training large models like GPT-4 require significant computational power, which can be costly and environmentally demanding. This resource intensity limits its deployment in settings where computational efficiency and sustainability are priorities.

No Moral or Ethical Judgment:

GPT-4 does not have a built-in sense of morality or ethics. It can inadvertently generate inappropriate or harmful content if not carefully guided by user constraints or ethical filters.

Vulnerability to Exploitation:

The model can be manipulated to generate harmful content, misinformation, or malicious code if misused. Its accessibility raises concerns about potential exploitation in generating spam, phishing attempts, or other nefarious activities.

Poor Performance on Complex Math or Logic:

While GPT-4 can handle simple calculations, it often struggles with complex math problems, logic puzzles, or tasks that require step-by-step reasoning. This limitation reduces its effectiveness in applications that demand precise and reliable quantitative analysis.

Lack of Long-Term Memory:

GPT-4 does not retain information between sessions, meaning it cannot remember previous conversations or learn from past interactions unless explicitly designed with a system that feeds historical context into the session. This limits its effectiveness in tasks requiring continuity.
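A system that "feeds historical context into the session" can be as simple as the sketch below: the application stores past messages and, on every turn, sends as many recent ones as fit in a token budget. The names `build_context` and `count_tokens` are hypothetical, and counting one token per word is a crude stand-in for a real tokenizer.

```python
def count_tokens(text):
    """Crude stand-in for a real tokenizer: one token per word."""
    return len(text.split())

def build_context(history, new_message, max_tokens):
    """Return the most recent messages that fit in `max_tokens`, oldest first.

    `history` is a list of (role, text) pairs saved by the application.
    """
    budget = max_tokens - count_tokens(new_message)
    kept = []
    for role, text in reversed(history):  # walk from newest to oldest
        cost = count_tokens(text)
        if cost > budget:
            break  # everything older than this is dropped
        kept.append((role, text))
        budget -= cost
    kept.reverse()
    return kept + [("user", new_message)]

history = [
    ("user", "My name is Ada and I like chess"),
    ("assistant", "Nice to meet you Ada"),
    ("user", "Recommend an opening"),
    ("assistant", "Try the Italian Game"),
]

context = build_context(history, "What is my name", max_tokens=15)
for role, text in context:
    print(role, "->", text)
```

With a budget of 15 tokens, the message containing the user's name no longer fits, so the model would have no way to answer the question. Raising the budget to 30 keeps the whole history. This trade-off between cost and continuity is why long conversations can appear to "forget" early details.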

Conclusion:

GPT-4 is a powerful tool with impressive strengths in speed, versatility, and creativity. However, it also has significant limitations, particularly in areas requiring deep understanding, ethical judgment, and factual accuracy. Understanding both its strengths and weaknesses allows users to leverage GPT-4 effectively while remaining mindful of its constraints, ensuring it is applied in contexts where its capabilities align with the task at hand.