How Large Language Models (LLMs) Understand the Meaning of Words

Discover how Large Language Models (LLMs) like GPT-4 understand the meaning of words. This article breaks down the process in simple terms, using mathematics where necessary, to explain how AI models comprehend language.

Author

D Team

31 Aug 2024


Artificial Intelligence, especially in the form of Large Language Models (LLMs) like GPT-4, has become a hot topic. One of the most fascinating aspects of LLMs is their ability to understand and generate human-like text. But how do these models "understand" the meaning of words? Is it magic, or is there a scientific explanation? Let's break it down in simple terms with a touch of mathematics to keep it interesting.

1. What Are LLMs and Why Are They Impressive?

Large Language Models are a type of AI model trained to process, understand, and generate natural language. Models like GPT-4 are powered by neural networks, which are loosely inspired by the structure of the human brain. They are trained on vast datasets containing text from books, articles, websites, and more. These models learn patterns, associations, and even context from this massive data, enabling them to generate coherent and contextually appropriate text.

What makes LLMs impressive is their ability to:

Generate text that is grammatically correct and contextually relevant.

Understand and maintain context over long paragraphs.

Answer questions, summarize information, and even engage in conversation.

However, behind this apparent "understanding" lies a complex mathematical foundation. Let's explore how LLMs work with a focus on how they interpret the meaning of words.

2. The Building Blocks: Words as Mathematical Representations

LLMs don't understand words the way humans do. Instead, they rely on mathematical representations to grasp the concept of a word. These representations are called word embeddings.

Word Embeddings: Imagine every word in the dictionary being plotted in a multi-dimensional space where each word is a point. The position of each word in this space is determined by its relationship to other words. Words with similar meanings or contexts end up closer together (e.g., "king" and "queen") while unrelated words are farther apart (e.g., "king" and "banana").

These word embeddings are created using techniques like Word2Vec or GloVe, or, in the case of LLMs, learned directly during training as part of the model itself. The mathematics behind this involves linear algebra and statistics, but let's break it down further.
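In a neural language model, an embedding is typically stored as a lookup table: each vocabulary entry gets an integer ID, and that ID selects one row of a matrix whose numbers are adjusted during training. The sketch below is a minimal illustration under stated assumptions: the five-word vocabulary, the 4-dimensional vectors, and the `embed` helper are all hypothetical, and the table is filled with random numbers because no training is shown.

```python
import random

# Hypothetical toy vocabulary; real models use tens of thousands of tokens.
vocab = ["king", "queen", "man", "woman", "banana"]
word_to_id = {word: i for i, word in enumerate(vocab)}

EMBED_DIM = 4  # real models use hundreds or thousands of dimensions

# The embedding table: one row (vector) per vocabulary entry.
# Randomly initialised here; training would gradually adjust these numbers.
rng = random.Random(0)
embedding_table = [[rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in vocab]

def embed(word: str) -> list[float]:
    """Look up a word's vector by turning it into an ID and indexing the table."""
    return embedding_table[word_to_id[word]]

print(embed("king"))
```

Before training, these vectors carry no meaning; it is the training process that moves related words closer together.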

3. Math in Simple Terms: Vectors and Word Relationships

To understand word embeddings, think of each word as a vector—a mathematical object that has both a direction and a magnitude. Here’s a simplified explanation:

Vector Representation: In mathematics, a vector can be thought of as an arrow in a multi-dimensional space. For example, the word "cat" might be represented by a vector [0.1, 0.2, 0.5, 0.7,...] in a 300-dimensional space.

Cosine Similarity: Words that are similar in meaning tend to have vectors that lie close together in this space. Cosine similarity measures this closeness through the angle between two vectors: it is 1 when they point in the same direction, 0 when they are perpendicular, and -1 when they point in opposite directions. If two words have a high cosine similarity, they tend to appear in similar contexts and are often related in meaning.
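Cosine similarity is defined as the dot product of two vectors divided by the product of their lengths. A minimal sketch in plain Python, assuming hand-picked 3-dimensional toy vectors (these numbers are made up for illustration and do not come from any trained model):

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|); ranges from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors chosen so that "king" and "queen" point the same way
king = [0.8, 0.6, 0.1]
queen = [0.7, 0.7, 0.1]
banana = [0.0, 0.1, 0.9]

print(cosine_similarity(king, queen))   # close to 1: similar direction
print(cosine_similarity(king, banana))  # much smaller: different direction
```

Because only the angle matters, two vectors of very different lengths can still have a cosine similarity of 1.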

Example:

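Imagine the words "king", "queen", "man", and "woman." If you subtract the vector for "man" from "king" and add the vector for "woman," you end up close to the vector for "queen." This is often written as:

$$\vec{v}_{\text{king}} - \vec{v}_{\text{man}} + \vec{v}_{\text{woman}} \approx \vec{v}_{\text{queen}}$$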

This simple arithmetic shows how embeddings can encode relationships between words (here, the relationship between male and female forms), an idea that is foundational to how LLMs represent meaning.
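The analogy can be tested by computing the target vector and then searching for the word whose vector points most nearly in the same direction, leaving out the three input words. A minimal sketch, assuming hand-built toy embeddings whose dimensions were deliberately chosen so the analogy works; the `analogy` helper is hypothetical, and in real trained embeddings the relationship holds only approximately:

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hand-built toy embeddings (hypothetical). The dimensions loosely mean
# [royalty, maleness, fruit-ness]; trained embeddings are far less tidy.
embeddings = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.2, 0.1],
    "man":    [0.1, 0.8, 0.1],
    "woman":  [0.1, 0.2, 0.1],
    "banana": [0.0, 0.5, 0.9],
}

def analogy(a: str, b: str, c: str) -> str:
    """Solve 'a is to b as c is to ?' by computing vec(b) - vec(a) + vec(c)."""
    target = [vb - va + vc for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])]
    # Consider every word except the three inputs, and keep the best match.
    candidates = (w for w in embeddings if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(embeddings[w], target))

print(analogy("man", "king", "woman"))  # queen
```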

4. Contextual Understanding: More Than Just Word Embeddings

Classic word embeddings such as Word2Vec and GloVe give each word a single, static vector, regardless of the sentence it appears in. However, words often change meaning based on context. For instance, "bat" could refer to an animal or a piece of sports equipment, depending on the sentence.

LLMs handle this with the transformer architecture, introduced in the 2017 paper "Attention Is All You Need." Its attention layers help the model focus on different parts of a sentence to understand context better.

Attention Mechanism: Think of this like reading comprehension. When we read, we don't give equal attention to every word. We focus more on certain words to understand the context. Similarly, the attention mechanism lets the model assign each word in a sentence a weight reflecting how relevant it is to the word currently being processed, and then blend the words' representations according to those weights. This helps resolve ambiguities, such as which sense of a word is intended.

For example, in the sentence, "The bat flew across the room," the model uses the context provided by the word "flew" to understand that "bat" refers to the animal, not a baseball bat.
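The transformer's attention is usually written as scaled dot-product attention, softmax(QKᵀ / √d) V, where Q, K and V hold query, key and value vectors and d is their dimension. A minimal pure-Python sketch, under loud assumptions: the 2-dimensional vectors for "The bat flew" are made up, and a real transformer would derive separate query, key and value vectors through learned projections, whereas here the same toy vectors are reused for all three to keep the example short.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, one query row at a time."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        # Relevance of every word (key) to this word (query)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # New representation: the value vectors blended by those weights
        blended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(blended)
        weight_rows.append(weights)
    return outputs, weight_rows

# Hypothetical toy vectors; the same vectors serve as queries, keys and values.
tokens = ["The", "bat", "flew"]
x = [[0.1, 0.0], [0.5, 0.5], [0.0, 1.0]]
outputs, weight_rows = attention(x, x, x)

bat_weights = weight_rows[tokens.index("bat")]
for token, w in zip(tokens, bat_weights):
    print(f"bat -> {token}: {w:.2f}")
```

With these made-up numbers, "bat" places more weight on "flew" than on "The", so its output vector is pulled toward the representation of "flew". In a trained model the weights come from learned parameters rather than hand-picked vectors.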

5. Fine-Tuning and Learning: Adapting to New Information

While pre-trained models are already powerful, they can be further fine-tuned on specific datasets to adapt to particular tasks or domains (like medical, legal, or casual conversation). This process allows them to better understand domain-specific language and context, enhancing their comprehension abilities.

During fine-tuning, the model adjusts its parameters (essentially the "dials" and "knobs" that determine how it processes language) based on the new data it is exposed to, nudging each one in the direction that reduces its errors on that data. This is akin to how a student might refine their knowledge after receiving specialized training in a subject area.
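Fine-tuning an LLM relies on gradient-based optimisation: compute how the error changes as each parameter changes, then step every parameter slightly downhill. The sketch below shrinks that idea to a two-parameter linear model, y = w·x + b, trained with plain gradient descent on mean squared error. This is an illustrative stand-in, not how any particular LLM is fine-tuned; the "pre-trained" starting values, the tiny domain dataset, and the `fine_tune` and `mse` helpers are all hypothetical.

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w: float, b: float, data, learning_rate: float = 0.1, epochs: int = 50):
    """Adjust the parameters (w, b) with gradient descent to reduce MSE on data."""
    n = len(data)
    for _ in range(epochs):
        # Partial derivatives of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Turn each "dial" a little in the direction that lowers the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# "Pre-trained" parameters and a small hypothetical domain dataset following y = 2x + 1
w0, b0 = 1.0, 0.0
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w1, b1 = fine_tune(w0, b0, domain_data)

print(f"error before: {mse(w0, b0, domain_data):.3f}, after: {mse(w1, b1, domain_data):.3f}")
```

An LLM does the same kind of update, only across billions of parameters and with a loss that measures how well it predicts the next token.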

6. Common Misconceptions: Do LLMs Truly Understand?

It’s easy to anthropomorphize LLMs and think they "understand" language like humans do. However, it's important to clarify that LLMs do not have consciousness or genuine understanding. They are pattern-matching machines that predict the next word (more precisely, the next token, which may be a whole word or a fragment of one) in a sentence based on probabilities derived from their training data. Their "understanding" is a sophisticated illusion created by mathematical models and vast datasets.
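At each step, a model produces a raw score (a "logit") for every candidate token, and a softmax turns those scores into probabilities; the next token is then either the most likely one or a random draw weighted by those probabilities. A minimal sketch, assuming made-up scores for three candidate words after "The bat flew across the"; real models score their entire vocabulary, not three words.

```python
import math
import random

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for the word after "The bat flew across the"
logits = {"room": 3.2, "field": 1.5, "banana": -2.0}
probs = softmax(logits)

greedy = max(probs, key=probs.get)  # always pick the most likely word
sampled = random.choices(list(probs), weights=list(probs.values()), k=1)[0]  # weighted random draw

print(probs)
print("greedy:", greedy, "| sampled:", sampled)
```

Sampling is one reason the same prompt can yield different answers, and it also illustrates why a fluent but wrong continuation is possible: the model is choosing likely text, not checking facts.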

What LLMs lack is common sense and real-world experience. They don't "know" in the human sense; they compute probabilities and patterns. Thus, they might produce plausible but incorrect or nonsensical answers if prompted in the wrong way.

Conclusion: The Power and Limitations of LLMs

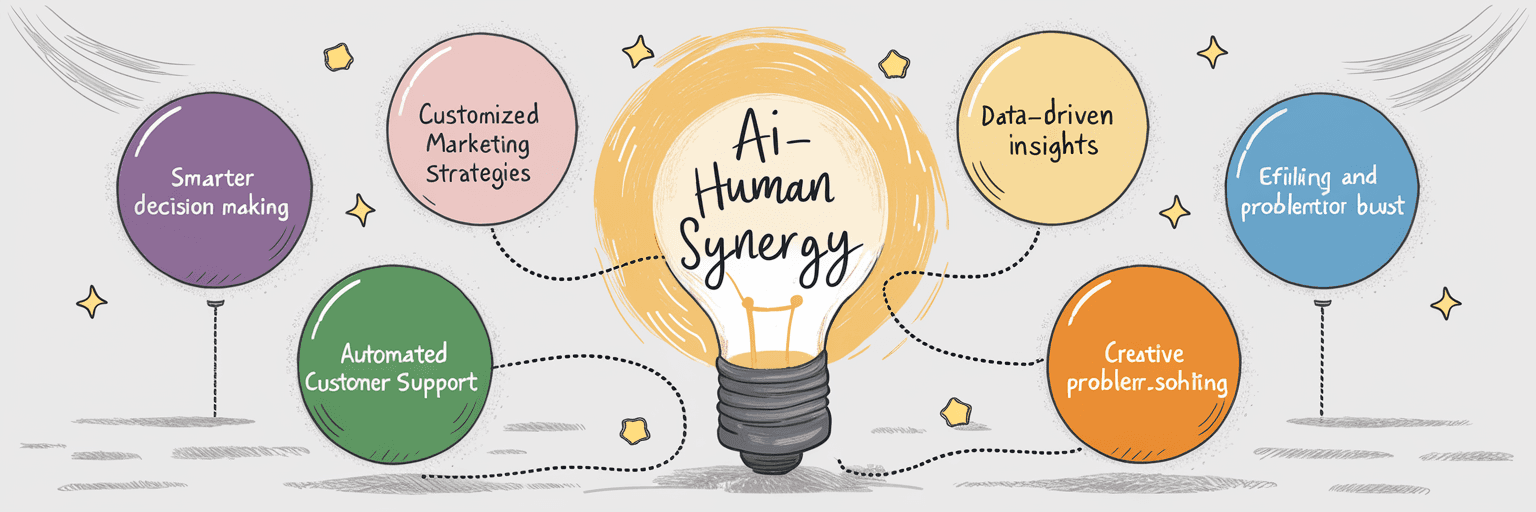

Large Language Models have transformed how we interact with technology, enabling more natural and engaging interactions. Their ability to understand words and context is a result of complex mathematics, clever engineering, and immense computational power. While they excel at many tasks, it's essential to remember they are tools created to assist humans, not replacements for human understanding.

From a Research-Driven Perspective:

LLMs represent a significant step forward in natural language processing and AI. While they bring remarkable capabilities, future research will focus on enhancing their understanding, reducing biases, and aligning them more closely with human values and needs.

By understanding the mechanisms behind LLMs, we appreciate their power and limitations, allowing us to use them effectively and ethically.